How can electrons be “topological”?

The following text is an excerpt from a draft of an article that I co-wrote for the magazine Physics Today, together with Prof. Art Ramirez at UC Santa Cruz. The article will appear (edited, and with more professional figures) in the magazine in September. The article attempts to give some intuition about the concept of “topological” electron bands, which have become very important in modern condensed matter physics.

Dawn of the Topological Age?

Historians often label epochs of human history according to their material technologies. For example, we have the bronze age, the iron age and, more recently, the silicon age. From the physicist’s perspective, the silicon age began with the interplay of theory, experiment, and device prototyping of a new type of material, the semiconductor. Semiconductors had been known since the late 1800s as materials with unusual sensitivity to light, to the method of synthesis, and to the direction of current flow. It was not until the early 1930s, however, that a theoretical understanding of semiconductors approached its modern form [1]. The prevailing view at the time saw metals and insulators as opposite limits of electron itinerancy, adiabatically controlled by the probability for an electron to hop between atoms. But the recently developed idea of accessible electron energies (bands) and inaccessible energies (band gaps) provided a natural category for semiconductors – they were more like insulators, but with smaller band gaps, often controlled by impurities. The next fifteen years witnessed breakthroughs in the purification and control of dopants in the elemental semiconductors silicon and germanium that eventually enabled the discovery of transistor action at Bell Labs in 1947. A surprise came during the transistor patent preparation, however. The basic idea underlying the field effect transistor had already been patented in 1930 by Julius Lilienfeld, an Austro-Hungarian physicist who had emigrated to the United States in 1921.

For semiconductors, the path from materials discovery to device implementation was neither linear nor easily predicted. For a relatively new class of materials, called “topological” materials, one can notice compelling analogies with the development of semiconductors, suggesting the tantalizing possibility that we are at the dawn of the “topological age”. In this article, we will describe what it means for materials to be “topological,” and why topology raises the prospect for revolutionary new devices.

The “shape” of an electron band

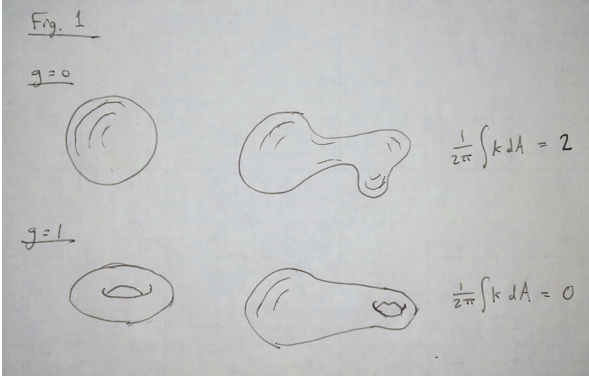

The notion of a topological invariant comes from a branch of mathematics called topology, which studies properties of geometric objects that are conserved under continuous deformations. The most famous such property is the genus, g, which is an integer that counts the number of holes in a three-dimensional (3D) shape (i.e., g = 0 for a sphere or a plate, g = 1 for a donut or a coffee mug, g = 3 for a pretzel, etc.). The genus is defined via the Gauss-Bonnet theorem, which states that the integral of Gaussian curvature K over the surface S of an object is quantized:

$$\frac{1}{2\pi}\int_S K \, dA = n.$$

Here $n$ is an integer that is related to the genus by $n = 2(1 - g)$. For example, for a sphere with radius $R$, the curvature $K = 1/R^2$ is a uniform constant, so the integral over the surface area gives $n = 4\pi R^2/(2\pi R^2) = 2$, which is consistent with the genus $g = 0$ of an object that has no holes in it. The remarkable implication of the Gauss-Bonnet theorem is that, if one stretches the sphere in any arbitrary way, so that some parts of the surface become more curved and other parts become flatter, the integer $n$ remains unchanged. The integrated curvature is invariant (see Fig. 1).

Fig. 1 A topological invariant is a property of a geometric shape that does not change when the shape is stretched or distorted. One such invariant is the genus g, which is defined by the number of holes in the surface and is related to the integral of the Gaussian curvature K over the surface of the shape. For example, shapes with no holes in them (g = 0) all give the same value of this integral, as do shapes with one hole in them (g = 1).
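As a rough numerical check of this invariance, the sketch below integrates the Gaussian curvature over an ellipsoid, which is just a stretched sphere. The function name `euler_integer`, the grid sizes, and the choice of semi-axes are illustrative assumptions rather than anything from the article; the curvature formula used is the standard one for an ellipsoid.

```python
import numpy as np

def euler_integer(a, b, c, n_theta=400, n_phi=400):
    """Return (1 / 2 pi) times the integral of Gaussian curvature over an ellipsoid."""
    # Midpoint grid in the polar angle theta and the azimuthal angle phi.
    d_theta = np.pi / n_theta
    d_phi = 2 * np.pi / n_phi
    theta = (np.arange(n_theta) + 0.5) * d_theta
    phi = (np.arange(n_phi) + 0.5) * d_phi
    th, ph = np.meshgrid(theta, phi, indexing="ij")

    # Points on the surface x^2/a^2 + y^2/b^2 + z^2/c^2 = 1.
    x = a * np.sin(th) * np.cos(ph)
    y = b * np.sin(th) * np.sin(ph)
    z = c * np.cos(th)

    # Gaussian curvature of an ellipsoid at the point (x, y, z).
    K = 1.0 / (a * b * c * (x**2 / a**4 + y**2 / b**4 + z**2 / c**4)) ** 2

    # Area element |r_theta x r_phi| d_theta d_phi from the tangent vectors.
    r_theta = np.stack([a * np.cos(th) * np.cos(ph),
                        b * np.cos(th) * np.sin(ph),
                        -c * np.sin(th)], axis=-1)
    r_phi = np.stack([-a * np.sin(th) * np.sin(ph),
                      b * np.sin(th) * np.cos(ph),
                      np.zeros_like(th)], axis=-1)
    dA = np.linalg.norm(np.cross(r_theta, r_phi), axis=-1) * d_theta * d_phi

    return np.sum(K * dA) / (2 * np.pi)

n_sphere = euler_integer(1.0, 1.0, 1.0)      # an ordinary sphere
n_stretched = euler_integer(1.0, 1.5, 2.5)   # the same sphere, stretched
print(n_sphere, n_stretched)
```

Both values should come out very close to 2, the value expected for a shape with no holes, even though the local curvature of the stretched shape is far from uniform.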

Much of the recent excitement surrounding “topological electronics” originates in the prospect of finding physical properties of electronic systems that behave like this integer-valued genus. Such an invariant property is necessarily robust to small perturbations or defects, since integers cannot change continuously. In the remainder of this section we will explain the origin of a commonly-discussed invariant, the Chern number, which is what defines a “topological electron band”.

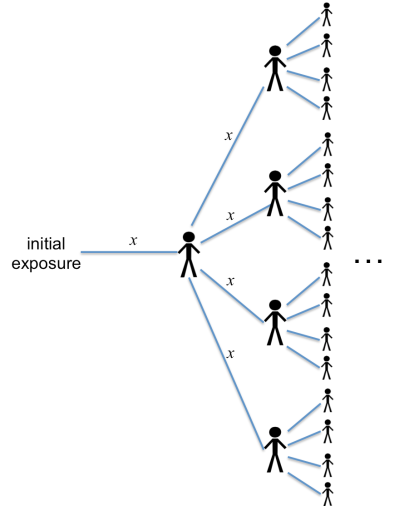

Within a single isolated atom, electrons occupy discrete quantum energy levels, or orbitals. When many atoms are arranged into a crystal, the wave functions from neighboring atoms hybridize with each other, and the orbitals broaden into bands of states having a range of energies. Each of the states within a band describes an electron that is shared among many atoms, and its wave function can be written in terms of the momentum $\vec{p}$ with which the electron hops from one atom to another:

$$\psi_{\vec{p}}(\vec{r}) = e^{i \vec{p} \cdot \vec{r}/\hbar} \, u_{\vec{p}}(\vec{r}).$$

The factor $e^{i \vec{p} \cdot \vec{r}/\hbar}$ in this wave function resembles the usual “plane wave” state that describes free electrons. But this plane wave is multiplied by a function $u_{\vec{p}}(\vec{r})$, called the “Bloch function” (after Felix Bloch [3]), that repeats periodically for each identical unit (the unit cell) of the crystal and describes the way that the electron is affected by atomic nuclei within the unit cell. As we will see, it is the Bloch function that contains information about the topology of the electron band.

Importantly, the electron momentum can take only certain restricted values. Since $\vec{p}$ describes the process of hopping between neighboring crystal lattice sites, the de Broglie wavelength $\lambda = 2\pi\hbar/|\vec{p}|$ associated with the wave function cannot be shorter than a minimum set by the distance between neighboring unit cells of the crystal (twice the lattice spacing, in one dimension). Thus, in a given direction, the momentum has a maximum possible magnitude. The space of allowable momenta is called the Brillouin zone, and its shape depends on the arrangement of atoms in the crystal. In discussing the “topology” of an electron band, the Brillouin zone plays the role of a “geometric space”, and closed surfaces within the Brillouin zone are akin to geometric shapes, which can have an integer-valued index akin to the genus. Importantly, exactly opposite points on the zone boundary are equivalent to each other, since they correspond to the same standing wave state with minimal de Broglie wavelength (effectively, the Brillouin zone has periodic boundary conditions).
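The equivalence of opposite zone-boundary points can be seen in a few lines. The sketch below assumes a one-dimensional chain with lattice spacing `a` (an arbitrary illustrative value) and works with the wave number $k = p/\hbar$; it compares plane-wave factors evaluated only at the lattice sites.

```python
import numpy as np

a = 1.0                      # lattice spacing (arbitrary units, assumed)
sites = a * np.arange(8)     # positions of eight neighboring lattice sites
k = 0.3 * np.pi / a          # a wave number inside the Brillouin zone
G = 2 * np.pi / a            # width of the 1D Brillouin zone

# Shifting k by the zone width changes nothing at the lattice sites.
wave_inside = np.exp(1j * k * sites)
wave_shifted = np.exp(1j * (k + G) * sites)
same_on_lattice = np.allclose(wave_inside, wave_shifted)

# The two zone-boundary points k = +pi/a and k = -pi/a also coincide.
edge_plus = np.exp(1j * (np.pi / a) * sites)
edge_minus = np.exp(-1j * (np.pi / a) * sites)
edges_equivalent = np.allclose(edge_plus, edge_minus)

print(same_on_lattice, edges_equivalent)
```

At the zone boundary the factor simply alternates in sign from site to site, which is the standing wave with a wavelength of two lattice spacings.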

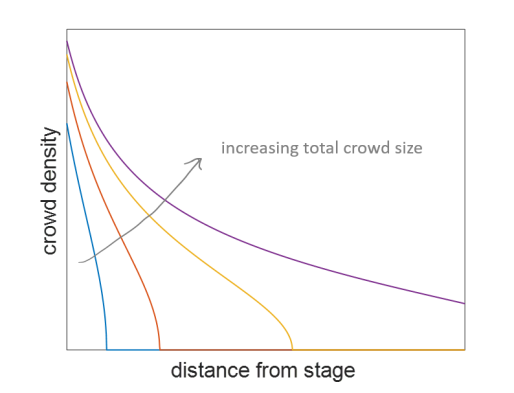

Constructing an analogy with the Gauss-Bonnet theorem for electron bands requires an analog of the “curvature” that is integrated over a closed surface. As it turns out, this analog of curvature arises from the properties of the Bloch functions $u_{\vec{p}}(\vec{r})$. To see the analogy, consider asking the question: for a given momentum $\vec{p}$, where within the unit cell is the electron wave function centered? The usual way to answer this question is to calculate the expectation value of the position operator $\hat{\vec{r}}$ within the unit cell. Written in terms of momentum, the position operator is $\hat{\vec{r}} = i\hbar\nabla_{\vec{p}}$, and one can write its expectation value as

$$\vec{A}(\vec{p}) = \langle u_{\vec{p}} | \, i\hbar\nabla_{\vec{p}} \, | u_{\vec{p}} \rangle.$$

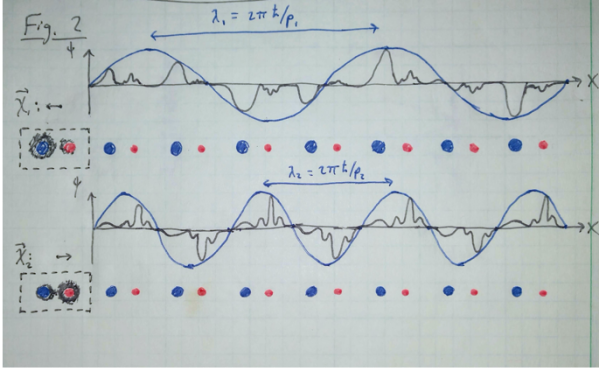

This quantity is called the “Berry connection”, and it can be thought of as the momentum-dependent centroid of the electron wave function in real space (see Fig. 2). One caveat is that $\vec{A}$ is not precisely defined because its definition is not gauge-invariant. The Bloch functions $u_{\vec{p}}(\vec{r})$ are defined only up to an overall phase (a multiplicative factor $e^{i\theta(\vec{p})}$) that can be momentum-dependent. Thus the Berry connection is like the vector potential in problems with a magnetic field, and only its curl has a physical meaning. We will show below that this analogy with magnetic field runs much deeper.

Fig. 2 Inside a crystal, the “free particle” electron states are described by a slowly-oscillating plane wave (blue curve) that is modulated by Bloch functions (black curve), which describe the electron’s attraction to the atoms (red and blue circles) within the repeating unit cell of the crystal. For a given momentum state, the electron probability density (shaded black areas on the left) is shared among the atoms within the unit cell in a way that can depend on the momentum $\vec{p}$. The Berry connection $\vec{A}$ is a vector that can be thought of as the centroid location of the electron within the unit cell, and it varies as a function of $\vec{p}$.

Imagine now the hypothetical process of accelerating and then decelerating an electron in such a way that the electron returns to its initial momentum. During the course of this cyclic process the electron traces out a path $P$ in momentum space, and its final state is identical to its initial state except for a possible overall phase factor. This phase is an example of what is called a Berry phase and its value is

$$\phi = \frac{1}{\hbar}\oint_P \vec{A}(\vec{p}) \cdot d\vec{p}.$$

(This expression for the phase is analogous to how a particle traversing a path in position space experiences a phase shift $\frac{1}{\hbar}\int \vec{p} \cdot d\vec{r}$, equal to the number of wavelengths in the path multiplied by $2\pi$.) Traversing the path $P$ in the clockwise and counterclockwise directions leads to opposite signs for the phase $\phi$, since the direction of momentum change along the path is opposite in the two cases.
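A discrete version of this loop integral is easy to experiment with. The sketch below is only an illustration: it replaces the Bloch function by a generic two-component state (the helper `spinor` is an assumed stand-in, not a model of any material) and accumulates the phase of the overlaps between neighboring states along a closed path. It also checks two properties discussed in the text: reversing the path flips the sign of the phase, and multiplying each state by an arbitrary phase factor leaves the loop phase unchanged.

```python
import numpy as np

def spinor(theta, phi):
    """Two-component state pointing along the direction (theta, phi)."""
    return np.array([np.cos(theta / 2), np.exp(1j * phi) * np.sin(theta / 2)])

def berry_phase(states):
    """Discrete Berry phase of a closed sequence of states (rows of an array)."""
    product = 1.0 + 0.0j
    for current, nxt in zip(states, np.roll(states, -1, axis=0)):
        product *= np.vdot(current, nxt)   # overlap <current|next>
    return -np.angle(product)

# A closed path: the direction sweeps once around a cone of half-angle pi/3.
phis = np.linspace(0.0, 2 * np.pi, 2000, endpoint=False)
loop = np.array([spinor(np.pi / 3, phi) for phi in phis])

phase_forward = berry_phase(loop)
phase_backward = berry_phase(loop[::-1])   # same path, opposite direction

# Arbitrary phase choice ("gauge") for every state along the path.
rng = np.random.default_rng(0)
gauge = np.exp(1j * rng.uniform(0.0, 2 * np.pi, len(loop)))
phase_regauged = berry_phase(loop * gauge[:, None])

print(phase_forward, phase_backward, phase_regauged)
```

For this particular path the magnitude of the phase is half the solid angle enclosed by the cone, $\pi/2$; its overall sign depends on the sign convention chosen in `berry_phase`.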

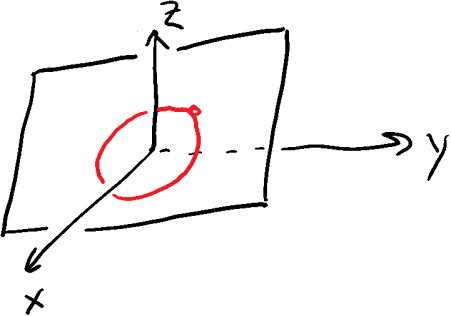

Fig. 3 The set of all possible momenta $\vec{p}$ for electrons within the crystal defines a Brillouin zone (denoted BZ, and illustrated for a 2D system by the black square). Opposite edges of the BZ describe the same wave function, and therefore are equivalent to each other. The Berry connection is a vector field within the BZ (black arrows). If an electron is accelerated and decelerated along some closed path P (red loop), its wave function acquires an overall phase $\phi$ whose sign depends on the direction of the path, clockwise or counterclockwise. A closed path along the BZ boundary gives an overall phase that must be a multiple of $2\pi$. This requirement can be seen as a consequence of the ambiguity in the direction of the path. For example, the thick red arrow at the top boundary is equivalent to the dashed blue arrow at the bottom boundary, and thus the path around the boundary can be described as either clockwise or counterclockwise.

The Berry phase becomes particularly instructive if we consider how it behaves for paths on a closed two-dimensional (2D) surface of momenta. For example, the Brillouin zone of a 2D system effectively acts like a closed surface because opposite edges of the zone are equivalent to each other. Consider, in particular, the path shown in Fig. 3, which traverses the Brillouin zone boundary of a 2D system. Traversing this path in the clockwise direction yields some Berry phase $\phi$, while the counterclockwise direction gives $-\phi$. However, because opposite edges of the zone boundary describe physically equivalent states, the clockwise and counterclockwise paths must produce equivalent changes to the wave function. The only way this requirement can be satisfied is if either $\phi = 0$, or $\phi$ is an integer multiple of $2\pi$, so that the phase factor $e^{i\phi}$ multiplying the wave function becomes equal to unity. This quantization of the Berry phase in units of $2\pi$ can be turned into a more exact analog of the Gauss-Bonnet theorem by using Stokes’ Theorem to relate the contour integral along the boundary of the Brillouin zone to a surface integral across the entire Brillouin zone. This procedure gives

$$\frac{1}{2\pi\hbar}\int_{\mathrm{BZ}} \vec{\Omega} \cdot d^2\vec{p} = C,$$

where $\vec{\Omega} = \nabla_{\vec{p}} \times \vec{A}$ is called the Berry curvature, and $C$ is an integer called the Chern number.
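To see an integer actually emerge from such an integral, here is a minimal numerical sketch. Everything specific in it is an assumption made for illustration: the two-band lattice Hamiltonian is the Qi-Wu-Zhang toy model (not a model from this article), momenta are measured in dimensionless units $k = pa/\hbar$, and the Brillouin zone integral is replaced by a sum of small plaquette phases on a grid (the lattice method of Fukui, Hatsugai, and Suzuki) rather than a direct evaluation of the curl.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)

def lower_band_state(kx, ky, m):
    """Bloch state of the lower band of the (assumed) Qi-Wu-Zhang model."""
    h = np.sin(kx) * SX + np.sin(ky) * SY + (m + np.cos(kx) + np.cos(ky)) * SZ
    _, vecs = np.linalg.eigh(h)      # eigenvalues come back in ascending order
    return vecs[:, 0]

def chern_number(m, n=24):
    """Sum the Berry flux through each grid plaquette of the Brillouin zone."""
    ks = 2 * np.pi * np.arange(n) / n
    u = np.array([[lower_band_state(kx, ky, m) for ky in ks] for kx in ks])
    total = 0.0
    for i in range(n):
        for j in range(n):
            ip, jp = (i + 1) % n, (j + 1) % n   # periodic zone boundary
            loop = (np.vdot(u[i, j], u[ip, j]) * np.vdot(u[ip, j], u[ip, jp])
                    * np.vdot(u[ip, jp], u[i, jp]) * np.vdot(u[i, jp], u[i, j]))
            total += np.angle(loop)             # phase around one plaquette
    return total / (2 * np.pi)

for m in (1.0, -1.0, 3.0):
    print(m, round(chern_number(m)))
```

For this toy model the result should be an integer of magnitude one when the parameter `m` lies between 0 and 2 in magnitude, with opposite signs for positive and negative `m`, and zero for larger `m`. The overall sign depends on the conventions chosen, and the integer does not drift as `m` is varied within one of these ranges.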

One can notice that, even though local symmetries do not define the Chern number, having $C \neq 0$ requires that certain symmetries must be broken. In particular, one can see from Fig. 3 that within the Brillouin zone of a system with nonzero Chern number the momenta $\vec{p}$ and $-\vec{p}$ are not equivalent, in the sense that they have different values of the Berry connection $\vec{A}$. Such an inequivalence requires the system to break the symmetry with respect to inversion (inverting the spatial coordinates of the system) or time reversal (playing electron trajectories backward in time). Thus, the search for topological materials has largely focused on materials that break one of these two symmetries. In fact, as we show below, only systems with broken time reversal symmetry can have a nonzero Chern number, and breaking inversion symmetry alone is insufficient. However, the coupling between electron spin and momentum may allow a given spin species (up or down) to have a nonzero Chern number, so long as the two spin-resolved Chern numbers sum to zero.

Below we discuss this idea and other implications of electron topology, as well as its generalization to 3D systems.

Implications of Topology

One can notice from Fig. 3 that a nonzero Chern number implies a “winding” or “self-rotation” in the structure of the electron wave function. This self-rotation is associated with a physical angular momentum for electrons. For example, if one imagines making a wave packet using states from some particular region of momentum space, one sees that there is a relation between the position (within the unit cell) and the momentum of the states that comprise the wave packet. This relation between position and momentum implies an angular momentum for the wave packet that depends on the local Berry curvature. In this sense the Berry curvature is again like a magnetic field, created by a broken symmetry in the material itself rather than by any external source, in that it gives electrons an angular momentum.

The analogy of Berry curvature to magnetic field can be made more precise by considering the effects of an applied electric field $\vec{E}$, which accelerates the electron momentum at a rate $d\vec{p}/dt = -e\vec{E}$, where $-e$ is the electron charge. If the local Berry connection $\vec{A}(\vec{p})$ has a finite curl, then, as the electron accelerates, its centroid location shifts in the transverse direction. This shifting of the electron in the transverse direction is called the anomalous velocity, and is given by

$$\vec{v}_a = -\frac{d\vec{p}}{dt} \times \vec{\Omega}.$$

In this way, the anomalous velocity is like the E-cross-B drift experienced by an electron in crossed electric and magnetic fields. Applying an electric field in a particular direction causes an electron to drift in a direction that is, in part, transverse to both the field and the direction of the (momentum-dependent) Berry curvature, which acts like a magnetic field.
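The transverse character of this drift can be illustrated with a few made-up numbers. The sketch below assumes the form $\vec{v}_a = -(d\vec{p}/dt) \times \vec{\Omega}$ with $d\vec{p}/dt = -e\vec{E}$; the overall sign depends on conventions, and the field and curvature values are arbitrary illustrative choices in arbitrary units.

```python
import numpy as np

e = 1.0                                  # magnitude of the electron charge (arbitrary units)
E_field = np.array([1.0, 0.0, 0.0])      # applied electric field along x
Omega = np.array([0.0, 0.0, 0.5])        # Berry curvature along z (made-up value)

dp_dt = -e * E_field                     # rate of change of the electron momentum
v_anomalous = -np.cross(dp_dt, Omega)    # anomalous velocity (assumed sign convention)

print(v_anomalous)
print(np.dot(v_anomalous, E_field), np.dot(v_anomalous, Omega))
```

The velocity comes out along the y axis, perpendicular to both the field and the Berry curvature, just as a drift in crossed electric and magnetic fields would be.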

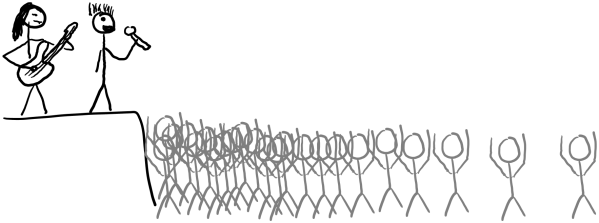

One of the most striking implications of the magnetic field analogy arises from the motion of electrons at the boundary of a sample. In a conductor with no intrinsic Berry phase that is subjected to a magnetic field, electrons near the boundary perform “skipping orbits”, essentially rolling along the boundary in a direction that is defined by the magnetic field direction. No matter how the boundary is shaped, these skipping orbits persist, providing a single conducting channel for current to flow through. In a similar way, the self-rotation implied by a finite Chern number guarantees the existence of traveling edge states. In 2D electron systems with a magnetic field (and sufficiently high electron mobility), the skipping orbit states give rise to the celebrated Quantum Hall Effect, including a quantized electrical conductance whose value is completely universal. 2D materials with finite Chern number have this same universal conductance, even though no magnetic field is present.

In fact, the existence of a topological invariant for electron systems subjected to a magnetic field was first identified by Thouless, Kohmoto, Nightingale and den Nijs (TKNN) [4]. This topological invariant allows for a remarkable universality of the quantum Hall effect among different samples and materials. Indeed, the TKNN invariant allows the universal constant $e^2/h$ to be measured to more than 12 significant figures of accuracy, and it now forms the basis for the metrological standard of the kilogram [5]. The Chern number defined above can be thought of as a generalization of the TKNN result, such that any given material has a particular integer Chern number defined in the absence of any applied fields. Most of the materials we know of have $C = 0$, and the possibility of 2D materials with nonzero Chern number was one of the seminal insights of the topological age.

There is an important way, however, in which the edge states of a topological material can be different from the edge states of a quantum Hall system. In a quantum Hall system, the magnetic field breaks the time-reversal symmetry of the system, since the magnetic field forces electrons to turn in spiral trajectories with a particular handedness, clockwise or counterclockwise, that is set by the magnetic field direction. These spiral trajectories break time reversal symmetry since playing them backwards in time (without reversing the sign of the external magnetic field) produces motion that is inconsistent with the Lorentz force law.

But it is possible to have a topological electron band, and its associated edge states, even in a material that has time-reversal symmetry. The key idea is to combine broken inversion symmetry in the material with a strong coupling between the electron’s momentum and its spin. For example, in the simplest case of a quantum spin Hall insulator, these two ingredients combine to allow the two different electron spin states to have nonzero but opposite Chern number. To see how this possibility can arise, consider that the time reversal operation also reverses an electron’s spin, so that, under time reversal, a left-going-spin-up electron becomes a right-going-spin-down electron. Thus, a topological electron band can retain time-reversal symmetry if the bands for spin-up and spin-down electrons have opposite Chern number (Fig. 4). Opposite Chern number means that the two spin species have opposite-moving edge states. This locking of the direction of the edge state to the spin is called the quantum spin Hall effect, and it was discovered experimentally in 2007 following its theoretical prediction in 2003.

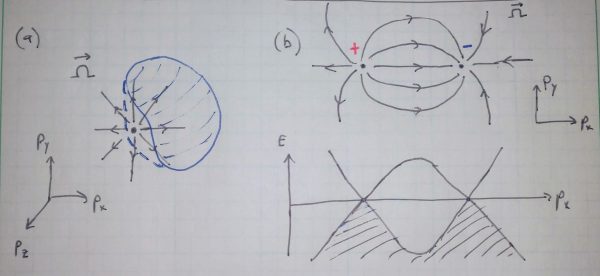

Fig. 4. A quantum spin Hall system has equal and opposite Chern number for its two spin species. (a) The Berry connection winds in the opposite direction for up and down spins, giving $C = \pm 1$, respectively. The system remains symmetric under time reversal, which simultaneously changes $\vec{p}$ to $-\vec{p}$ and ↑ to ↓. (b) Edge states at the boundary of the sample move one spin clockwise and the other counterclockwise around the sample.
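The cancellation between the two spin species can be checked in a toy calculation. In the sketch below, the spin-up block `h_up` is an assumed two-band lattice model (the Qi-Wu-Zhang model, chosen only for convenience), and the spin-down block `h_down` is built as its time-reversed partner: the complex conjugate evaluated at the opposite momentum. The Chern number of each block is estimated by summing plaquette phases over a grid covering the Brillouin zone, with momenta in dimensionless units.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)

def h_up(kx, ky, m=1.0):
    """Assumed toy Hamiltonian for spin-up electrons."""
    return np.sin(kx) * SX + np.sin(ky) * SY + (m + np.cos(kx) + np.cos(ky)) * SZ

def h_down(kx, ky, m=1.0):
    """Time-reversed partner: complex conjugate at the opposite momentum."""
    return np.conj(h_up(-kx, -ky, m))

def lower_band_chern(hamiltonian, n=24):
    """Chern number of the lower band from plaquette phases on an n x n grid."""
    ks = 2 * np.pi * np.arange(n) / n
    u = np.array([[np.linalg.eigh(hamiltonian(kx, ky))[1][:, 0] for ky in ks]
                  for kx in ks])
    total = 0.0
    for i in range(n):
        for j in range(n):
            ip, jp = (i + 1) % n, (j + 1) % n   # periodic zone boundary
            loop = (np.vdot(u[i, j], u[ip, j]) * np.vdot(u[ip, j], u[ip, jp])
                    * np.vdot(u[ip, jp], u[i, jp]) * np.vdot(u[i, jp], u[i, j]))
            total += np.angle(loop)
    return total / (2 * np.pi)

c_up = lower_band_chern(h_up)
c_down = lower_band_chern(h_down)
print(round(c_up), round(c_down), round(c_up + c_down))
```

The two blocks should give opposite integers of magnitude one, so that the total Chern number vanishes, consistent with the combined system respecting time reversal symmetry.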

Ultimately, the locking of spin to momentum at edge states arises from the microscopic spin-orbit, or “$\vec{L} \cdot \vec{S}$”, coupling that is present in atomic orbitals. Spin-orbit coupling arises when a fast-moving electron experiences a magnetic field in its reference frame due to the electrostatic potential of the nucleus, and the strength of this field varies as the fourth power of the atomic number. In topological materials with time reversal symmetry, strong spin-orbit coupling combines with broken inversion symmetry to produce a Berry curvature and a finite Chern number for each spin, even in the absence of a magnetic field.

Topological bands in three dimensions

So far we have only discussed one example of a topological invariant: the Chern number in a 2D band, which gives rise to edge states very much like those in the quantum Hall effect. But a range of 3D materials have been identified with electrical properties that are protected by a topological invariant. These include the 3D topological insulators, which are largely made from narrow band-gap semiconductors with strong spin-orbit coupling [6]. In these materials, an electrically insulating interior coexists with surface states that form 2D metals on every free surface and have a similar locking of the electron’s spin perpendicular to its momentum.

The 2D Chern number discussed above can also be applied to understand another class of 3D materials: the Weyl semimetals. These materials have special points in momentum space where their “topological charge” is concentrated. To see why this happens, imagine defining an arbitrary closed surface $S$ of momentum states within the 3D Brillouin zone of some material (Fig. 5). One can apply to this surface the same arguments about Berry phase that we used for the 2D case, and arrive at the conclusion that the Chern number associated with the surface must also be quantized. In particular,

$$\frac{1}{2\pi\hbar}\oint_S \vec{\Omega} \cdot d^2\vec{p} = C,$$

where $C$ is an integer that depends on the chosen surface $S$ and the integral defines a “flux of Berry curvature” through the surface. Since $C$ is an integer and cannot change continuously, slight distortions of the surface $S$ cannot produce small changes to the integral. The only way for $C$ to change is by a discontinuous jump when $S$ is expanded to include a particular, special point in momentum space. Such points are monopoles of Berry flux, by analogy to the description of electric charges via Gauss’s law, and they always come in pairs of opposite charge. Materials that possess such monopoles are called Weyl semimetals, and the special points in momentum space are Weyl points.
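The jump in the flux can be reproduced numerically for the simplest possible case. The sketch below assumes an idealized Weyl point described by the two-band Hamiltonian $H = \vec{k} \cdot \vec{\sigma}$, with $\vec{k}$ the momentum measured from the Weyl point in arbitrary units; the function names and grid sizes are illustrative. It tiles a sphere in momentum space with small patches and adds up the Berry phase around each patch.

```python
import numpy as np

PAULI = np.array([[[0, 1], [1, 0]],
                  [[0, -1j], [1j, 0]],
                  [[1, 0], [0, -1]]], dtype=complex)

def lower_state(k):
    """Lower-band state of the assumed Weyl Hamiltonian H = k . sigma."""
    h = np.einsum("i,ijk->jk", k, PAULI)
    return np.linalg.eigh(h)[1][:, 0]

def berry_flux_through_sphere(center, radius, n_theta=40, n_phi=40):
    """Total Berry flux (in units of 2 pi) through a sphere of momentum states."""
    center = np.asarray(center, dtype=float)
    thetas = np.linspace(0.0, np.pi, n_theta + 1)      # includes both poles
    phis = 2 * np.pi * np.arange(n_phi) / n_phi        # periodic angle
    u = np.empty((n_theta + 1, n_phi, 2), dtype=complex)
    for i, th in enumerate(thetas):
        for j, ph in enumerate(phis):
            n_hat = np.array([np.sin(th) * np.cos(ph),
                              np.sin(th) * np.sin(ph),
                              np.cos(th)])
            u[i, j] = lower_state(center + radius * n_hat)
    total = 0.0
    for i in range(n_theta):
        for j in range(n_phi):
            jn = (j + 1) % n_phi
            loop = (np.vdot(u[i, j], u[i + 1, j]) * np.vdot(u[i + 1, j], u[i + 1, jn])
                    * np.vdot(u[i + 1, jn], u[i, jn]) * np.vdot(u[i, jn], u[i, j]))
            total += np.angle(loop)                    # phase around one patch
    return total / (2 * np.pi)

flux_enclosing = berry_flux_through_sphere([0.0, 0.0, 0.0], 1.0)  # contains the Weyl point
flux_away = berry_flux_through_sphere([3.0, 0.0, 0.0], 1.0)       # does not contain it
print(round(flux_enclosing), round(flux_away))
```

The sphere that surrounds the Weyl point should give an integer of magnitude one (the sign depends on conventions and on which band is chosen), while the sphere that misses it gives zero.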

Fig. 5. A Weyl semimetal has monopole sources of Berry curvature and points where two different electron bands meet. (a) The Berry curvature is drawn as a vector field emanating from a special point in momentum space, a Weyl point. A closed surface that does not contain any Weyl points has Chern number $C = 0$. If the surface is expanded to contain the point, it abruptly attains $C = 1$. (b) Weyl points come in pairs with opposite charge, located at different momenta (above). At the Weyl point, two electron bands meet in energy (below).

From a materials perspective, Weyl points arise when strong spin-orbit coupling causes two bands of states with different angular momentum to coincide in energy. Since the orbital character of the wave function must change abruptly upon crossing from one band to another, Weyl points correspond to the locations in momentum space where the two bands touch. In the usual metals and semiconductors that comprise the majority of our electronic technologies, such touching of bands is unusual. Typically, electronic bands cannot coincide in energy because of the phenomenon of avoided crossing — the quantum phenomenon of hybridization of degenerate quantum states into symmetric and antisymmetric combinations that have different energies. However, it was recognized as early as 1937, in a paper by Conyers Herring that presaged much of modern topological band theory [7], that two electron bands could meet in energy because of “accidental degeneracies” that prevent the two bands from hybridizing. In these cases, a perturbation that removes an accidental degeneracy can destroy the band crossing point and open a gap. In Weyl semimetals, however, the Weyl points are protected by the quantization of the Chern number. Only a sufficiently strong perturbation, which effectively brings two oppositely-charged Weyl points together, can destroy the degeneracy and open a gap between two electron bands. Thus, a Weyl semimetal is a topologically-protected gapless system (a semimetal). Like the other topological materials, Weyl semimetals have intriguing surface states. In particular, the surfaces of Weyl semimetals exhibit Fermi arcs, or momentum states ranging from one Weyl point momentum to another [9].

Experimentally, the study of topological materials is progressing rapidly, with new compounds, even whole classes of topological materials, being discovered routinely. The initial discovery of 3D topological insulating behavior was made using angle-resolved photoemission spectroscopy (ARPES) in Bi$_{1-x}$Sb$_x$, a mixture of two non-topological semimetals, each with spin-orbit-coupled bands, that produces a bulk semiconductor for $0.07 < x < 0.22$ [12]. Since that discovery, many other topological insulators, as well as Weyl and nodal-line semimetals, have been identified. While ARPES remains a primary tool for revealing electronic band structures, the transport properties of topological materials are also being intensely studied, and raise the possibility of new device functionalities.

What technologies will topological materials enable?

The usefulness of many materials comes from their ability to either pass a current of some kind, or to prevent a current from flowing. For example, copper is useful because it allows electric current to flow freely down the length of a wire, while the polymer encasing the wire is useful because it blocks the current from leaking out. Other examples include materials for passing or blocking heat currents (like heat sinks on computer processors, or the insulating foam on the space shuttle) or filtering light (like the lenses on protective sunglasses, which pass some light frequencies while blocking others). Seen from this perspective, the “silicon age” arose because silicon can act as a kind of switchable valve, an “on/off switch” for electrical current. We know now that pure silicon is a good insulator, but if a “gate” voltage is applied to its surface it becomes an electrical conductor.

Thus, for developing new electronic materials, the main goals are usually filtering and sensitivity. The material should be able to selectively pass or block a generalized current (like silicon can selectively pass electrical current), or exhibit a strong response to some input (like silicon p-n junctions can turn light into electricity). In these questions of filtering and sensitivity, topological materials offer the promise of truly new technologies.

Topological materials can perform interesting kinds of filtering because of their Berry curvature. Since Berry curvature is a kind of winding or handedness that breaks the symmetry between clockwise and counterclockwise motion, topological materials can act like a doorknob, which “opens” when turned in the correct direction and blocks motion in the wrong direction. One striking application is in spin filtering. As illustrated in Fig. 4, the edge states in a topological insulator carry electrons with opposite spin in opposite directions. Such filtering is an essential ingredient for so-called spintronics, which aims to build electronic and computer technology based on currents of spin rather than charge [13]. The Berry curvature also implies that different directions of light polarization (clockwise or counterclockwise) couple differently to the electrons in the material, and this effect can be used to create optical filters or logic circuits [14].

Topological materials are also unusually responsive to many kinds of applied fields, owing to their gapless, topologically protected electron bands. For example, the topological edge states associated with finite Chern number offer the promise of dissipationless current-carrying channels, a potential alternative to superconductors for some applications, perhaps even at room temperature. More generally, the topological protection of low-energy states in a topological electron band can be exploited in a number of ways, providing an advantage over conventional materials where the low energy states are often heavily distorted by disorder.

This topological protection of the electron band structure may be the reason why some topological materials exhibit enormous electrical mobility (i.e., a very large contribution to the current from each mobile electron) [15]. The Weyl semimetals can also have an extreme sensitivity to light, which may yield a new generation of photo-detectors and night-vision goggles [16]. Topological semimetals also promise an unprecedented thermoelectric effect, which is the ability to convert waste heat into useful electric power [17]. Finally, topological electrons have an unusually sensitive response to magnetic fields, including a wide spacing between quantum levels of the electron’s magnetic field orbit (Landau levels), and a strong reduction of electrical resistance when a magnetic field is applied along the current direction (the chiral anomaly) [18].

Whether these effects, or the myriad others that are currently being studied, will revolutionize our current electronic technologies remains to be seen. But what is clear is that ideas from topology have established themselves in materials physics. They have led us to predict and observe new materials and new phenomena, and they are here to stay. Who can tell how we will choose to name our current era in the decades to come?

References

[1] M. Riordan and L. Hoddeson, Crystal Fire (W. W. Norton & Co., New York, 1997).

[2] L. D. Landau, Zh. Eksp. Teor. Fiz. 7, 19 (1937).

[3] F. Bloch, Zeitschrift für Physik 52, 555 (1929).

[4] D. J. Thouless, M. Kohmoto, M. P. Nightingale, and M. den Nijs, Physical Review Letters 49, 405 (1982).

[5] K. von Klitzing, Physical Review Letters 122, 200001 (2019).

[6] C. Kane and J. Moore, Physics World 24, 32 (2011).

[7] C. Herring, Physical Review 52, 365 (1937).

[9] X. G. Wan, A. M. Turner, A. Vishwanath, and S. Y. Savrasov, Physical Review B 83, 205101 (2011).

[10] C. Fang, H. M. Weng, X. Dai, and Z. Fang, Chinese Physics B 25, 117106 (2016).

[11] F. Schindler, A. M. Cook, M. G. Vergniory, Z. J. Wang, S. S. P. Parkin, B. A. Bernevig, and T. Neupert, Science Advances 4, eaat0346 (2018).

[12] D. Hsieh, D. Qian, L. Wray, Y. Xia, Y. S. Hor, R. J. Cava, and M. Z. Hasan, Nature 452, 970 (2008).

[13] I. Zutic, J. Fabian, and S. Das Sarma, Reviews of Modern Physics 76, 323 (2004).

[14] K. F. Mak, D. Xiao, and J. Shan, Nature Photonics 12, 451 (2018).

[15] T. Liang, Q. Gibson, M. N. Ali, M. H. Liu, R. J. Cava, and N. P. Ong, Nature Materials 14, 280 (2015).

[16] C. K. Chan, N. H. Lindner, G. Refael, and P. A. Lee, Physical Review B 95, 041104 (2017).

[17] B. Skinner and L. Fu, Science Advances 4, eaat2621 (2018).

[18] A. A. Burkov, Journal of Physics: Condensed Matter 27, 113201 (2015).

[19] B. Bradlyn, L. Elcoro, J. Cano, M. G. Vergniory, Z. Wang, C. Felser, M. I. Aroyo, and B. A. Bernevig, Nature 547, 298 (2017).

The Physics Olympiad, and finding community

When I was in high school I spent about 2 hours after school every day running track. This was, on the face of it, an unpleasant thing to do. Running is literally painful, and I devoted something like 10% of my waking life to it.

So why did I do so much running? It turns out that there were more or less three reasons. First, I was an ambitious kid, and competitive running provided an outlet for that ambition. Second, I got to experience the joy of acquiring a new skill, and seeing myself improve at something that had previously been difficult. Third, and perhaps most importantly, joining the track team gave me access to a social community. I made friends (including across the normal lines of class and race that tend to divide students), I had adventures, and I matured as a social being.

These are all normal and healthy reasons for doing something that might otherwise seem unpleasant.

I bring this up because last month I spent two weeks at the training camp for the US team of the International Physics Olympiad. The twenty kids at this camp are among the smartest high school physics students in the country, and to arrive at this level they had to devote about 2 hours every day after school to studying physics.

This is, on the face of it, an unpleasant thing to do.

I think that their reasons for studying physics so intensely are similar to my reasons for running track. These are mostly ambitious kids, who enjoy doing something competitive and acquiring a new skill. But what a surprise and a joy it has been for me to discover that physics can also bring high school kids community. These kids (seem to have) made deep and lasting friendships at camp, and I am learning that there is a whole world of physics clubs and camps and internet forums that I was previously blind to. Physics seems to be central to their social life in the way that sports were for me, and are for so many other kids.

And the truth is that studying physics has rewards that are probably more real and long-lasting than the ones that come from sports. Even if these kids don’t become physicists by profession, they will have had the experience of learning difficult but exciting things and solving difficult but exciting problems. And that’s the sort of thing that shapes your opinion about the world and about yourself in a very positive way.

It’s hard for me to discuss the physics olympiad without gushing about the staggering intelligence and technical competence of these kids. They are literally, without exception, 4-5 years ahead of where I was when I graduated from high school. I saw them quickly solve physics problems that I would have struggled with as a grad student, and which even today took me a while to figure out. While preparing the first practice exam in camp, I wrote a problem that I thought was clever and tricky, using some logic I had learned in grad school. And then when I graded the practice exam I found that their median score on the problem was 19/20.

You know, I grew up to be a physics professor. So I can’t even predict what their opportunities will be.

If anything, I worry that these kids are so clever that they will have a hard time emotionally when they first encounter an interesting physics problem that can’t be solved at all. They have become so used to being able to solve tricky and clever problems, that perhaps when they get their first taste of physics research — of encountering a problem that might not even be well-posed, and might not have a solution — it will be difficult for them emotionally. Or, even worse to imagine, they could take it as a sign that they’re not really smart enough to do physics, when in fact the issue is with the problem and not the problem-solver.

A friend of mine (a physics professor, whom I’ll call Val) once told me the story of some of his high school classmates. Val had gone to some kind of magnet school in Croatia, and he had several brilliant classmates. These classmates always seemed to solve physics problems effortlessly, while Val himself struggled continuously. He felt, in the end, that he got through high school physics only by leaning on his friendships like a crutch. Eventually they all went to college together, and the brilliant friends remained brilliant and breezed through everything, while Val continued to struggle with each concept and always felt like he was just barely eking by.

After college they all went to grad school in physics, and suddenly the problems they were working on got truly hard. These problems required months, semesters, or even years of slow and painful thinking, trying many wrong approaches before eventually stumbling on something that worked (or, perhaps, eventually giving up and trying something else instead). For Val, this was natural — this was how physics had always been for him. But for the brilliant friends this kind of frustration and lack of progress was oppressive. They got discouraged, and eventually lost enthusiasm and left physics altogether.

All this is to say, I hope that physics isn’t too easy for these kids. I hope that they love it even when it feels unclear, frustrating, and slow. This way, when it really takes on those qualities in earnest, they can still love it.

Of course, if they leave physics to do something else, that’s fine too.

Crowding around the blackboard to learn some thermodynamics from head coach JJ Dong

Powering through one of the many practice exams

I probably need to address the elephant in the room here: demographics. Of the 20 students who qualified for the physics olympiad camp (on the basis of two rounds of exams), 16 were Chinese-American, 3 were Indian-American, and one was Caucasian. There were 19 boys and 1 girl.

But racial and gender biases weren’t the only demographic trends on display. There was also an overwhelming representation of private schools and magnet schools, even though the vast majority of high schoolers in the USA attend non-specialized public schools. Certain “powerhouse” schools, in particular, seem to have multiple students qualify for camp every year.

As far as I can tell, these biases in representation come down to who is being encouraged to study physics, and who is being invited into the corresponding communities of physics. When I was in high school, for example, I had no idea that competitive physics was a thing, much less that there was a world of clubs, camps, and internet forums devoted to it. Maybe if I had known I would have chosen my hobbies differently.

At the moment this information seems to pass through certain communities of parents by word of mouth, and then the parents in turn motivate their children. Part of the problem, then, is on us, the coaches and organizers of the physics olympiad. We need to do a better job of getting the word out, and making people aware of the fact that there is a whole world of recreational physics (and recreational math and science, more broadly) available to kids. It can be as rewarding and engaging as any other hobby, and any other community.

But part of the problem is more intractable than a simple increase in advertising. If, as a teenager, you want to become a great runner, you probably need access to a good team and a good coach. So it is with physics, too: you will be hard-pressed to become a great practitioner of competitive physics unless there is a community in your school that is ready to give you training, friendship, and opportunity.

So perhaps this blog post can serve as an exhortation both to students and to teachers/administrators. Be aware that there is a whole world of recreational/competitive physics out there, and that this world is challenging and exciting, and comes with long-lasting benefits. Think about finding a way to engage with this world, or helping to open a path that enables others to do so.

For more information about the USA Physics Olympiad program, read this page: https://www.aapt.org/physicsteam/2019/program.cfm

You can read about the 2019 USA Physics Olympiad team here: https://www.aapt.org/physicsteam/2019/team.cfm

The 2019 Physics Olympiad (in Tel Aviv, Israel) is currently wrapping up. The results should be announced very shortly. But for the moment I can say that all five members of the traveling team performed brilliantly!

UPDATE: The results are released: https://www.ipho2019.org.il/results/

Congratulations to gold medalists Vincent Bian and Sean Chen, and to silver medalists Edward Lu, Albert Qin, and Sanjay Raman!

Physics goes to Washington

The camp ended with everyone treating their physics textbooks like yearbooks

What it means, and doesn’t mean, to get a job in physics

I have some reasonably momentous personal news: I got a job.

And I don’t just mean that I got a job, in the same sense that I’ve been employed doing research ever since getting my PhD. I got the job: the ostensibly permanent faculty position that so many of us have aspired to (and agonized over) since undergrad.

I have accepted a faculty position in Physics at Ohio State University. I’ll begin in January.

It isn’t my intention to brag here. But I should probably make the point that this job is a big deal for me. OSU Physics is an extremely good department, with great students and something like ten faculty members whose work I admire and whom I am eager to learn from. When you’re angling for a faculty position, you can really only hope to end up with a couple of colleagues like this, so for me this job is an embarrassment of good fortune. It’s not an exaggeration to say that this is exactly the kind of job I’ve dreamed of having ever since I decided that I wanted to be a scientist. I am very excited.

But I should also make the point that getting this far was difficult, long, and not particularly likely. My goal in writing this post is mostly to give a postmortem dissection of my career trajectory, for the benefit of current students who are thinking of following a similar path. I want to try and point out, as honestly as I can, which things I did right, which things I did wrong, and the ways in which I got lucky that enabled me to finally have a secure career in academic science.

A caveat: there is a risk when writing this kind of reflection of building up the tenure-track faculty position as some kind of ideal. That is, there is a danger of making it sound like getting a faculty job is “making it” while other options are “failing to make it”. I don’t mean to do that. There are lots of exciting things to do with a physics degree besides going on to be a physics professor. And there are plenty of people who were smarter than me and/or better at physics than me who went a different direction. To name a few, some friends and colleagues of mine went on to be: research scientists and engineers, data scientists, software engineers, financial analysts, technical writers, science journalists, teachers, and intelligence analysts. Many (perhaps even most) of these careers might be more rewarding or more challenging than the standard tenure-track professor job. But I can only comment on the path I followed myself.

Beware: this is probably the longest post I have ever written.

What are the odds?

I remember, as an undergrad, deciding more or less immediately that I wanted to try and be a physics professor. It was an exciting thought, but the one that immediately followed it was: “what are the odds?” I remember looking through the faculty roster at my university and seeing everyone’s listed undergraduate and graduate alma mater. It was pretty much a parade of Harvard, Princeton, MIT, Caltech, Stanford, Berkeley, etc. “Well,” I thought, “I’m at Virginia Tech. So what does that mean about my chances?”

From that moment on I pretty much operated under the assumption that I wouldn’t achieve my goal. But I decided that it was worth trying anyway, because I would at least get to have a physics-themed adventure along the way. And once you get a PhD in physics people seem to generally believe that you’re a smart person, and are willing to hire you for a range of different technical jobs (which is true; see the list of alternate jobs above). So my plan was to go to grad school in physics and then reassess from there, with the expectation that I would probably end up in some kind of non-academic, technology-oriented job.

The basic timeline of my academic path is like this:

- I started college at Virginia Tech in the fall of 2002, and graduated in the spring of 2007 with degrees in physics and mechanical engineering. (In high school I had thought I wanted to do robotics, and in college I was too stubborn to drop the mechanical engineering degree that I initially declared.)

- I started grad school at the University of Minnesota in the summer of 2007, and completed my PhD in the summer of 2011. (This is an unusually short duration; see comments below.)

- I stayed in Minnesota for two years as a postdoc, then went to Argonne National Laboratory in 2013 for a second postdoc. I spent two years there, which included applying for a number of faculty jobs that I didn’t get.

- I started a third postdoc at MIT in 2015. I applied extensively for faculty jobs during my time at MIT, but I wasn’t able to find a position before my appointment ran out in 2018. I was fortunate enough to find someone else willing to pay for me for an additional year, and in March of 2019 I landed the job at Ohio State.

So the chronological recap is:

- 5 years of undergrad

- 4 years of grad school

- 8 years of postdoc (at three different locations)

It’s a long road, friends.

I would say that, in my field, 8 years as a postdoc is longer than average. And, in fact, I was more or less considering that this year was my last chance. In an email, my PhD advisor had warned me that “your career will not survive another postdoc”, and he was probably right.

But 8 years is also not some extreme outlier. The average time spent as a postdoc (again, in my field) might be something like two postdocs and 5 or 6 years. Three postdocs is usually considered an upper limit, and people who don’t have a permanent job by the end of their third postdoc are often passed over or viewed with suspicion.

Along the way I had to make many, many job applications. Here’s the total count, along with the year of applying (in parentheses):

- 10 grad school applications (2007)

- 1 (2011) + 12 (2013) + 8 (2015) = 21 postdoc applications

- 1 (2012) + 16 (2015) + 7 (2017) + 33 (2018) + 42 (2019) = 99 faculty applications

- 1 (2012) + 3 (2015) + 6 (2018) + 5 (2019) = 15 faculty interviews

I probably don’t need to say that applications are exhausting and dispiriting. Faculty applications, in particular, are very time-consuming, and an actual faculty interview is brutal. My general rule of thumb is that during any year in which you are applying for a new (academic) job, you will lose about a third of your total productivity to the process of applying and the stress of worrying about how the application will turn out. Add together the years above and the implication is that I lost something like two solid years of my life to applications.

With each year of failure on the faculty job market, I got a little more anxious and a little more desperate. During the last two years, I often had to specifically justify why so much time had passed since my PhD. For example, during a Skype interview this year I was asked directly by the committee “It’s been a long time since your PhD; why don’t you have a job yet?” (they did not invite me for an in-person interview). More than once someone called my PhD advisor to ask for a justification as to why so much time had passed since my PhD.

In the end, I got a great job at a great institution. But I had to endure nearly 100 rejections and ten failed interviews first. There were many moments along the way when I thought I was looking at the end of the line.

I once heard it said (by a tenured professor) that there’s no point in stressing about jobs, because in the end all the “good people” get faculty positions and everyone else winds up with a lucrative tech-related career. This kind of dismissive and self-serving narrative seems completely inconsistent with my own observation. Luck seems to play as big a role as anything else.

In the remainder of this post I want to spell out the many ways in which I was the beneficiary of luck and kindness from others. But let me first try to be at least a bit positive and constructive, and outline the things I think I did correctly.

What I did right

I prioritized conceptual understanding over technical skill

When you’re a young student or postdoc, your first years are usually marked by a long struggle to gain some technical skill or competency. As soon as you attain this skill at the level required to produce publishable research, it’s very tempting to just rush to apply the skill to all the problems you can find. This kind of approach maximizes your instantaneous productivity at a time when you feel desperate to be as productive as possible.

But ultimately this is a dangerous approach. Because in order to get a job, you need to impress people in person, and not just on paper. And what impresses people in person is the ability to understand what they are working on, to ask intelligent questions, and to teach them some idea that they didn’t previously understand. If you can’t do this, and you instead come across as a “narrow professional” in an interview or a discussion, then people can be dismissive of you as a scientist.

In this sense I did the right thing by prioritizing a broad, conceptual understanding of physics over a narrow and virtuosic expertise. In my case this was sort of an accident; I did the former because I had a short attention span and easily got bored by doing the same thing repeatedly. It turned out, in the end, to be a good career move.

I learned how to talk about physics, in addition to learning how to do physics

The great theorist Anatoly Larkin used to say that there are two kinds of physics: written physics and oral physics. What I think he meant is that there are two distinct skills you need to acquire as a scientist: (1) the ability to do calculations or experiments, and (2) the ability to talk conceptually about science with your peers. As a student you often feel like the second skill will come naturally once you acquire the first. That is, you think that once you can produce science you will naturally be able to talk about it clearly with others.

But this isn’t true. Getting good at talking about science requires a concerted effort. You have to work and practice to be able to describe things to others in their simplest terms, or be able to make analogies and construct clear examples, or be able to approach an idea from multiple perspectives in case the first perspective doesn’t take. Without these skills your scientific career will almost certainly fall apart sooner or later, because “oral science” is the only way to impress people and forge collaborations.

Luckily for me, this skill was something I prioritized, mostly because I thought talking about physics was so much more fun than doing calculations. In fact, I created this blog (almost exactly ten years ago, during my second year of grad school) mostly as an outlet for my desire to “talk about physics” as distinct from “doing physics”. I’m very glad that I did.

I worked hard to make good talks

A common piece of advice given to grad students and postdocs is “until you get a permanent job, treat every talk like a job talk.” I’m not sure that this is a helpful thing to say, since it’s inclined to make you feel nervous and pressured at a moment when you need to feel relaxed. But it is true that anytime you give a talk you are building your reputation a little bit, and you are building up skill for a future job application. So take your talks seriously.

For me personally, a good talk is one that I learn something from. So when I’m designing a talk I always try to have at least one moment where I explain/derive some result in a clever or striking way. This “clever result” doesn’t have to be something that came out of my own research; it can be someone else’s idea, old or recent (and you should, of course, generously credit the person who originally came up with it). But the best way to make a scientist like you is to teach them something in a clear and clever way. Don’t pass up that opportunity lightly.

The best talks also have a narrative flow to them. In particular, they clearly set up a dilemma before resolving it. Before you tell the audience whatever new result you have, you need to make them feel uncomfortable about not knowing it. Don’t let your talks be just a summary of what you did.

I made friends in physics, and I put a lot of effort into maintaining those friendships

This may seem a little cynical, but it’s absolutely true: your friendships in science matter enormously to your career success. The people in your field who like you are the people who will provide you with opportunities – invitations to give lectures, invitations to conferences, opportunities to collaborate, positive reviews on your papers, etc. Having friends also just makes the process of doing science more fun.

I am not naturally a socially skilled person, so my method of making friends usually exploited the one interest I knew we all had in common: physics. Many of my friendships started by striking up a conversation about physics. Teaching someone an idea in a clear way is a great way to make a friend, but so is asking them to teach you.

I approached well-known, established people for mentorship and collaboration, and I tried to do good work for those people

This one mostly comes down to courage. Any field has its famous people, who are known for some body of great work. It’s easy to feel intimidated by these people, or to feel like you shouldn’t bother them. But if an opportunity comes to discuss science with such a person, or to collaborate scientifically, you almost have to take it. Eventually, to get a real job, you need to have (multiple) well-known people write you good letters of recommendation. The only way to get there is to boldly take the opportunities to work with those people whenever you get the chance.

Just remember, of course, to have the requisite humility. Be confident about the things you know how to do, and you should even be willing to “teach” some great person where you are able. But don’t ever pretend to understand something you don’t. Don’t pontificate and don’t pose. Most great scientists are happy to explain things, even if they seem embarrassingly basic to you. But they probably won’t tolerate posers.

When I found people who were smarter than me, I tried to learn from them

As an early-career scientist, you will continually find yourself running into people who are both younger and smarter than you. Given the omnipresent job anxiety, it’s easy to let these people make you feel deflated, anxious, or even resentful. Resist those urges as much as possible, and instead try to get these people to teach you things that you don’t know. This kind of earnest friendliness is a wonderful thing for them and for you. Some of my best friendships in science have been made this way.

I was generous, open, and friendly, and avoided being competitive or proprietary

This one can feel surprisingly hard, because there are so many great people competing for a very limited number of permanent jobs. So you can easily feel pressure to be overtly competitive with your peers – anxiously guarding your work away from them, competing for the attention of famous professors, or even “stealing” problems from others. But this kind of behavior will put you on people’s bad sides very quickly. On the other hand, being generous with your time and labor, open with your results, and friendly toward everyone will help you make much-needed friends.

I made an effort to be creative

In some fields, and in condensed matter physics in particular, there are topics that suddenly get “hot”, and a huge fraction of people suddenly start working on the trendy new topic. This leads to a deluge of work that is done quickly and obviously: people want to be first to establish some new result or stake some claim before others do. And the truth is that you probably need to spend some time doing this kind of work (see another comment below). But I personally tried to set aside at least some time to do creative work that wasn’t directly aligned with any trend or established field. This work wasn’t always cited very well, but I think that in the end people respected me for it. And it allowed others to see that I was someone who was willing to think creatively and across fields. While such broad-mindedness is often a secondary consideration in hiring decisions, it is a purely positive one, and it’s the sort of thing that people like to have in a colleague.

I was willing to sacrifice from my personal life and my personal relationships when necessary

This point is the saddest one to discuss, but I am trying to be as blunt and honest as possible.

I have been married for ten years. But I have lived apart from my wife for three of those years. If I hadn’t been willing to sacrifice from my marriage in this way – if I had insisted that I could only take jobs in cities where my wife was also employed – then I would almost certainly not still have a viable academic career.

This state of affairs is unfortunately very typical in academic science, unless one of the two people in the relationship makes a decision to abandon much of their career ambition and follow their spouse.

What I did wrong

I finished my PhD quickly

This one feels counterintuitive, but it’s an important point.

The first few years of my PhD were atypically productive, thanks to an unusually fortuitous match with my PhD advisor (more on this below). Three and a half years or so after entering grad school, I had authored or coauthored something like 12 published papers. So my advisor and I both decided that I had enough work to defend a PhD thesis, and I graduated after my fourth year. I was angling to stay in Minnesota longer while my wife finished her degree, so I transitioned smoothly to a postdoc with the same group.

Graduating “early” like this seems like a uniformly good thing to do. But it isn’t. The reason is that when you apply for future jobs people will judge your productivity on a sliding scale, with increasingly high standards based on how many years have passed since your PhD. On the other hand, no one really pays attention to how long the PhD lasted. So, all other things equal, a candidate who publishes 16 papers in their PhD looks significantly more impressive than a candidate who publishes 12 papers in their PhD and another 4 in their first postdoc, even if the two candidates started grad school at the same time.

So my advice is this: if you find yourself being very productive in the later years of your PhD, and if your goal is to get a faculty position, then draw those years out as long as you reasonably can. Be productive, write papers, learn a lot of things, give talks, etc., as a grad student. Because when people judge you, you want them to be able to think “wow, that person is such a great scientist, and they’re still just a grad student!”

I avoided fashionable topics

I know, I know, this one sounds like the most self-serving excuse for not having highly-recognized work. (My publication record at the time of being hired is decent, but probably below the level that is typical for a faculty hire at an Ohio State-level university.) There is a very common (and annoying) complaint among scientists: “I did responsible and deep work, but I never got the recognition I deserved because it wasn’t trendy at the time.”

In my case, though, I can’t claim that I avoided trendy topics because I was doing “deeper” work, necessarily. There were other reasons for my reluctance to jump into fashionable and fast-moving fields. And if I’m being honest, these reasons are not particularly flattering.

One reason for my reluctance was a kind of intellectual anxiety, or a lack of confidence. When a field is just emerging, everything feels new and confusing, and it can be very intimidating to try and jump in. You feel overwhelmed by how much you don’t know, and rather than buckle down and try to learn it all, it’s easy to just stay away and work on things you already know. But this is a missed opportunity. A field that is developing rapidly is also a field where people are learning rapidly, and if you stay away you will probably be learning less than you could be.

I think also that I abhorred the lack of clarity that predominates in a developing field. There is a sudden torrent of papers that make confused or contradictory claims; people rush to do experiments that aren’t properly controlled or properly understood; people rush to do calculations that are based on questionable assumptions. I hated wading through all that muck, and I allowed myself to justify staying away from it because I didn’t want to have to do the work of generating a clear perspective for myself.

This was also a missed opportunity, both to grow as a scientist and to establish myself as someone who is capable of creating clarity in a field where it was previously lacking.

I didn’t spend enough time reading new papers

There is a website called the arXiv, on which people post drafts of their new scientific papers, usually before submitting them to a journal for review. The arXiv is sort of the lifeblood of current events in physics. Most established physicists scan through the arXiv every night, catching up on the latest developments and looking for inspiration.

I tried to maintain an arXiv-reading ritual, but the truth is that I hated it. Looking through dozens or hundreds of papers every night, most of which seemed confusing, incomprehensible, and/or completely boring, was too hard for me emotionally. It made me feel overwhelmed, and I would start questioning why I was doing this and why I was in this profession at all. Eventually I just gave up, and I never really developed any kind of routine for reading new papers as they came out. I fell back on merely going to talks and conferences, talking to people at lunch, and googling things as needed.

This reluctance to read papers cost me significantly. I was usually late to learn about new developments, and I missed many opportunities to collaborate with others or provide them with information or references (including to my own work) that would have been helpful.

I allowed my work to become scattered, rather than focusing on a single field

When it comes to science, I have a bit of a short attention span. A scientific field is always most exciting to me when I am just learning its central ideas, and once I understand those I am easily distracted by some other field.

This tendency isn’t bad, necessarily, since it leads to rapid learning and creative thought. But when you are a young scientist you need for some community to recognize your work, and to know you personally. In my early years I made the mistake of scattering my work across many disconnected scientific communities. Suddenly, I found myself four years past my PhD and I realized that there was probably no scientist alive who would have read more than 3-4 of my 25 papers. This was a problem, and it probably delayed my employment significantly.

I insisted too often that people explain things in my terms, rather than learning to understand things in their terms

I realized relatively quickly that I had a “style” of doing physics. I had a particular way of thinking about things, which was based on intuitive pictures and simple math. This is not a bad thing; I have come to realize that my style has real value, and many people appreciate it.

But a lot of the time I would insist on thinking only in this style. When someone was trying to explain some idea to me, I would insist on parsing it in this way, asking lots of questions and forcing them to rephrase what they were saying until I could understand it and derive it in my natural language.

While understanding things in your own terms is probably essential to learning, it’s also true that I should have put in the work of learning how to think in multiple different ways at once. There were a lot of popular, “formal” ways of thinking in theoretical physics that I was slow to develop, to my detriment.

I didn’t prioritize my career over my wife’s career

This point, again, is difficult to admit, and even embarrassing, both for myself and for my profession.

Starting with graduate school applications, my wife and I made career decisions jointly. We always tried to weigh the options and choose the one that maximized the combined net benefit to her and to myself. To me this is the obviously correct, human way to behave in any kind of partnership. But in some cases the human approach meant that I didn’t get my first option, and in an ultra-competitive field that always made me more than a little nervous.

I don’t want to overplay this point, because in the end I got a very good job, and it’s hard to imagine that I would have done significantly better in some alternate timeline. And I’m more than grateful for the sacrifices that my wife made for the benefit of my career, and for all the times when she didn’t get her first choice. But it’s also true that by the time I arrived at my postdoc at MIT, most of my peers were either single or had partners who had agreed to subordinate their own career ambitions to those of their physicist partner. It is usually true, with some rare exceptions, that if you want to be a professor you don’t get to choose where you will live, and that means that someone’s career will probably have to take priority.

Ways in which I was lucky

I was uniformly encouraged

I was something like 8 years old when I first thought it would be cool to be a scientist. From that point onward, I would occasionally tell people that this was my aspiration and I don’t remember anyone ever giving me a single discouraging word.

It wasn’t until adulthood that I realized what a big deal this is. When I told people that I wanted to be a scientist, and they were encouraging, it allowed me to believe in the reality of the future I wanted. When difficulties inevitably arose, I was able to view them as simply difficulties, rather than as evidence that I wasn’t intrinsically good enough.

I had undergraduate advisors who cared more about my future than about their own research

As a freshman in college, I picked a research advisor almost at random. I asked the guidance counselor which professors wanted undergrad researchers, and then I went to the first person on the list. That the first name on the list was Beate Schmittmann was maybe my first really lucky break in physics.

Dr. Schmittmann was unusual in that, in her interactions with undergrads, her only real motivation was to introduce them to research and give them opportunities. Amazingly (in retrospect), she never tried to use my (inconsistent) labor to advance her own research. She introduced me to physics ideas, took me to conferences, and helped me through applications without ever asking for publication-quality work from me.

And I had a number of other professors who treated me this way: Dr. Bruce Vogelaar (also at Virginia Tech) introduced me to experimental particle physics, and gave me a number of really wonderful opportunities even though I was ultimately terrible at both particle physics and performing experiments. I did summer internships at MIT and at CERN, and it is really humbling in retrospect that anyone devoted any kind of resources to someone as inept as I was.

I ended up with a PhD advisor who (1) was famous, (2) really cared about teaching me, and (3) worked relentlessly to find me opportunities

By far the biggest piece of luck I had was in who I had as a PhD advisor. When you’re an undergraduate applying to grad schools, you have no understanding whatsoever of what makes a good advisor, or who the people are at the schools you’re considering. For the most part, you just look through people’s websites and see if they have any words or pictures that you like.

When I arrived at the University of Minnesota in the summer of 2007 I didn’t really know anything about the people there. I ended up working with Boris Shklovskii almost randomly – I chose condensed matter theory, and he was the first person who reached out to me. I also liked the titles of some of his papers.

But it turns out that who you have as a PhD advisor is the single biggest determinant of your academic success. Ideally, you want someone who is well-known and well-connected, who will provide you with good topics to work on and opportunities to make yourself known, and who will take time to teach you. It is rare to get all three of these things, and I was extremely fortunate to have all of them.

Boris is a rare and singular person in many regards. He is such an uncommonly clear scientific thinker, and he devoted an enormous amount of his time to me personally. All I can say is that I am exceptionally fortunate and exceptionally grateful for my time as his student, and I will have to write a proper post about him as a scientist some other time.

It turns out, though, that even having an advisor with all these qualities is not enough to make your PhD successful. You also need your advisor’s style of thinking and working to be compatible with your own. And it happened that Boris had his own unique style, which was remarkably closely aligned with the way I wanted to think about physics. So my good luck in that regard is really remarkable.

I went to the Boulder Summer School and made lots of friends, many (most?) of whom remained in academia

The biggest month of my early career came in the summer of 2013, when I attended the Boulder School for condensed matter physics. This was a month-long summer school, during which PhD students and postdocs could attend special lectures while living together in a dorm at the University of Colorado.

It is hard to overstate how important that summer was for me. Not necessarily because of the lectures (from which I learned much but retained relatively little), but because I suddenly found myself surrounded by exceptional young scientists, most of whom are still in academia. To be in that environment was so exciting that all I wanted to do was hang out and talk about physics with them all day. It was during that summer that I really learned the joy of making friends with someone by teaching each other physics. We did adventurous things, too, like go hiking and camping, but the real joy was the physics itself. Many of my best friends were made that summer.

I don’t know whether every year at Boulder School is like that, or whether I was part of an exceptionally good group. But if you are a student or postdoc, and you have the opportunity to go to a summer school like this one, I can’t urge you strongly enough to go. Go, make friends, and talk about science all the time. It will pay dividends for a very long time.

I got a postdoc position with an independent travel budget, and I used it to my full advantage

My postdoc at Argonne National Laboratory started in the fall of 2013, and it came with an unusual perk: a $20,000-per-year discretionary budget. This is extremely rare for a postdoc, and is probably a vestigial remnant of an arrangement that was originally designed to provide an experimentalist with equipment.

But I took full advantage of that budget. I traveled to give seminars at places that wouldn’t otherwise have had a budget for it. And I treated myself to a whole range of conferences both foreign and domestic. It was a great way to introduce myself to the wider scientific world, to make friends and to make myself known. And I also got to add a disproportionate number of “invited seminars” to my CV.

Someone was kind to me and gave me a postdoc job when I was floundering

In the spring of 2015 I was in something of a panic. Despite my rampant (mis)use of government funds, I couldn’t find a job. And to make the matter even more difficult, my wife was going to medical residency, which is governed by a tyrannical and completely non-negotiable matching algorithm. Predicting the outcome of the match is a hard thing to do, but after some agonizing it seemed like she was likely to be sent either to San Francisco or to Boston.

I think this was the first time when I really thought that I was done for. The outcome just looked too bleak: I couldn’t bear to be apart from my wife any longer, and I couldn’t see any way to get an academic job in either of those cities.

One night I was despairing to a Boulder School friend of mine who was a grad student at MIT, and he said “why don’t you just ask my advisor for a job?” This had never occurred to me. My friend’s advisor was an intimidating Russian theorist who was widely regarded as a genius. I had, in fact, had a few good (but short) conversations with him, at a couple of conferences and during a self-invited visit to MIT. But I never expected that he would deign to hire a (very) non-genius like me.

Nonetheless, I wrote to him one night and said [verbatim] “I find myself these days coping with a sort of tricky two-body problem, and recent developments are making me very motivated to find a job somewhere in Boston. … Do you know of anyone in Boston who is looking for a postdoc that might be interested in me?”

The very next day he said “I’ll make some inquiries and get back to you”, and within two weeks I had an offer letter for a postdoc at MIT.

I can hardly tell you what a miraculous event this was. My wife and I got to live together, and I got an office on the Infinite Corridor at MIT.

I should admit at this point that I had harbored an unrequited crush on MIT for a long time. Up to that moment, I had applied to be at MIT five times – for undergrad, for grad school, for a summer program, and for two postdoc positions – and had been rejected every time. In the end I got a position that required no application at all: just an email and a bit of nepotism.

I befriended people at MIT who became great scientists

The year I arrived at MIT there was an unusual cohort of brilliant and friendly young postdocs. I became fast friends with many of them, and have developed friendships that I truly cherish. I learned enormously from them, wrote papers with them, sang karaoke and went on hikes with them, and I can’t tell you how excited I am that I get to have a scientific career in parallel with them. Most of them have now gone on to faculty positions of their own.

Someone was kind to me and kept me on when I was about to fail out

I should say, finally, that even after making it to MIT it was not at all clear that I had “made it” into a permanent scientific career. During my second year at MIT I applied to seven faculty positions and didn’t get a single positive word in return; not an interview or a phone call. I tried again the next year, ostensibly the last of my three-year postdoc, and applied essentially everywhere that had even a remote chance of working for both me and my wife. The initial returns seemed good: I got six interviews at six good universities. But in the end I was not quite good enough anywhere, and after a protracted period of “maybe” from a few schools (and a few quicker rejections from others), I found myself completely without a job, seven years past my PhD, and with just a few months before my current job expired.

At this point I really thought the game was up. I was preparing my exit strategy, and trying to line up interviews in the private sector. But a different professor happened to hear about my predicament, and he offered to pay for me for one more year. This gave me one last chance at the academic job market, and the rest, I suppose, is history.

What to make of it all

If you are a young grad student or postdoc in science, reading this (overlong) account, I don’t know how you should feel. On the one hand, my career arc thus far has been a great adventure. If I saw someone else getting excited about the prospect of such an adventure, it would be easy for me to get excited with them.

But this arc has also been a difficult and very tenuous journey, generously supported by good fortune and by kindness from powerful people at just the right moments. If your decision upon reading this account is to avoid the whole mess altogether, then that seems as rational to me as anything else.

Let’s talk about a small question as a way of introducing a big question.

How thick is the atmosphere?

How far does Earth’s atmosphere extend into space? In other words, how high can you go in altitude before you start to have difficulty breathing, or your bag of chips explodes, or you need to wear extra sunscreen to protect your skin from UV damage?

You probably have a good guess for the answer to these questions: it’s something like a few miles of altitude. I personally notice that my skin burns pretty quickly above ~10,000 feet (about 2 miles or 3 km), and breathing is noticeably difficult above 14,000 feet even when I’m standing still.

Of course, technically the atmosphere extends way past 2-3 miles. There are rare air molecules from Earth extending deep into space, becoming ever more sparse as you move away from the planet. But there’s clearly a “typical thickness” of the atmosphere that is on the order of a few miles. Altitude changes that are much smaller in magnitude aren’t noticeable, and altitude changes that are much larger give you a much thinner atmosphere.

What physical principle determines this few-mile thickness?

At a conceptual level, this is actually a pretty simple problem of balancing kinetic and potential energy. Imagine following the trajectory of a single air molecule (say, an oxygen molecule) for a long time. This molecule moves in a sort of random trajectory, buffeted about by other air molecules, and it rises and falls in altitude. As it does so, it trades some of its kinetic energy for gravitational potential energy when it rises, and then trades that potential back for kinetic energy when it falls. If you average the kinetic and potential energy of the molecule over a long time, you’ll find that they are similar in magnitude, in just the same way that they would be for a ball that bounces up and down over and over again.

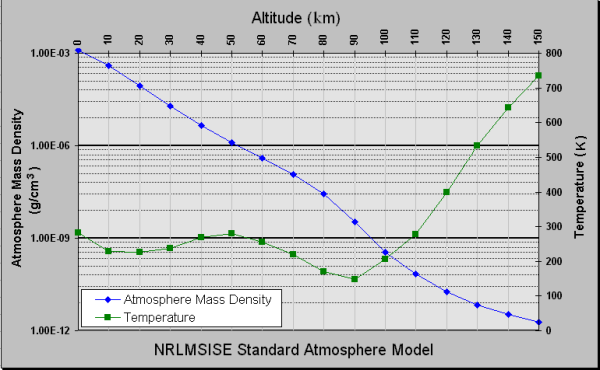
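The bouncing-ball comparison can be checked numerically. The sketch below assumes an idealized ball (perfectly elastic bounces, no air resistance) and uses made-up values for the mass and launch speed; it samples one full bounce uniformly in time and averages the two energies.

```python
import numpy as np

# Assumed illustrative values; the ratio computed below does not depend on them.
m = 1.0    # mass of the ball (kg)
g = 9.8    # gravitational acceleration (m/s^2)
v0 = 5.0   # upward speed just after each bounce (m/s)

# One full bounce lasts 2*v0/g. Sample it uniformly in time (midpoints).
n = 200_000
period = 2 * v0 / g
t = (np.arange(n) + 0.5) * period / n

v = v0 - g * t                 # vertical velocity during the bounce
z = v0 * t - 0.5 * g * t**2    # height above the floor

avg_ke = np.mean(0.5 * m * v**2)   # time-averaged kinetic energy
avg_pe = np.mean(m * g * z)        # time-averaged potential energy
ratio = avg_pe / avg_ke

print(f"<KE> = {avg_ke:.4f} J, <PE> = {avg_pe:.4f} J, <PE>/<KE> = {ratio:.4f}")
```

For this idealized ball the two averages are indeed similar in magnitude: the average potential energy comes out very close to twice the average kinetic energy, whatever values are chosen for the mass and launch speed.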

There is actually an important and precise statement of this equality, called the virial theorem, which in our case says that

$2 \langle KE \rangle = \langle PE \rangle$,

where $\langle KE \rangle$ is the average kinetic energy in the vertical direction and $\langle PE \rangle$ is the average potential energy.

The gravitational potential energy of a particle of mass $m$ at height $h$ is just

$PE = m g h$,

and the typical kinetic energy of the air molecule is related to the temperature (this is, in fact, the definition of temperature):

$\langle KE \rangle = \frac{1}{2} k_B T$,

where $k_B$ is Boltzmann’s constant and $T$ is the absolute temperature (i.e., measured from absolute zero). On the earth’s surface, $k_B T$ is about 25 milli-electronvolts, or $4 \times 10^{-21}$ Joules.

Using these equations to solve for $h$ gives $h \sim k_B T / (m g)$, which is about 5 miles.
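As a rough check on the numbers, here is a minimal sketch of the estimate. It takes the typical thickness to be the height at which the gravitational potential energy m g h of a molecule equals the thermal energy k_B T. The surface temperature of 300 K and the choice of an oxygen molecule (about 32 atomic mass units) are assumptions made for illustration.

```python
K_B = 1.380649e-23     # Boltzmann's constant (J/K)
EV = 1.602176634e-19   # one electronvolt in joules
AMU = 1.66053907e-27   # atomic mass unit (kg)
G = 9.81               # gravitational acceleration at the surface (m/s^2)

T = 300.0              # assumed surface temperature (K)
m_o2 = 32.0 * AMU      # mass of one O2 molecule, about 32 amu

thermal_energy = K_B * T                 # k_B T in joules
height_m = thermal_energy / (m_o2 * G)   # height where m*g*h equals k_B*T
height_miles = height_m / 1609.344       # metres per mile

print(f"k_B T = {thermal_energy:.2e} J = {1000 * thermal_energy / EV:.1f} meV")
print(f"thickness = {height_m / 1000:.1f} km = {height_miles:.1f} miles")
```

Swapping in a nitrogen molecule (about 28 amu) changes the answer by only about ten percent, so the few-mile scale is not sensitive to which common air molecule is followed.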

Everything makes sense so far, but let’s ask a more interesting question: What is the function that describes how the thickness of the atmosphere decays with altitude? In other words, what is the probability density $p(h)$ for a given air molecule to be at altitude $h$?

Let’s take a God-like perspective on this question [insert joke here about typical physicist arrogance]. Imagine that you could choose some function from the space of all possible functions, and in order to make your choice you must first ask the question: which function is best?

“Best” may seem like a completely subjective word, but in physics we often have optimization principles that let us define the “best solution” in a very specific way. In this case, the best solution is the one with the highest entropy. Remember that saying “this state has maximum entropy” literally means “this state is the one with the most possible ways of happening”. So what we are really searching for is the function that is most probable to appear from a random process.

The entropy of a probability distribution $p(h)$ is

$S = -k_B \int_0^\infty p(h) \ln p(h) \, dh$.

This is a generalization of the Boltzmann entropy formula (which is a sufficiently big deal that Boltzmann had it engraved on his tombstone).
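To see how the "number of ways" picture connects to an entropy written in terms of probabilities, here is a small sketch under simplifying assumptions: altitude is chopped into just three bins, the occupation numbers are hypothetical, and entropy is measured in units of Boltzmann's constant. It compares the logarithm of the number of ways W of arranging N molecules among the bins with N times the sum of -p ln p over the bins.

```python
from math import lgamma, log

def log_multiplicity(counts):
    """ln of the number of ways to distribute distinguishable molecules
    among bins so that bin i holds counts[i] of them (a multinomial coefficient)."""
    n_total = sum(counts)
    return lgamma(n_total + 1) - sum(lgamma(n + 1) for n in counts)

def entropy_per_molecule(counts):
    """-sum(p ln p) for the bin probabilities p = n / N, in units of k_B."""
    n_total = sum(counts)
    return -sum((n / n_total) * log(n / n_total) for n in counts if n > 0)

# Hypothetical occupations of three altitude bins (10,000 molecules in total).
counts = [5000, 3000, 2000]
n_total = sum(counts)

ln_w = log_multiplicity(counts)
n_times_h = n_total * entropy_per_molecule(counts)
print(f"ln W = {ln_w:.1f},  N * (-sum p ln p) = {n_times_h:.1f}")

# A more even split of the same molecules can happen in many more ways.
even = [3334, 3333, 3333]
print(f"ln W for the even split = {log_multiplicity(even):.1f}")
```

The two quantities agree to within a fraction of a percent for large N, which is the sense in which maximizing the entropy of the distribution is the same as finding the arrangement with the most ways of happening.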

Now, there are two relevant constraints on the function $p(h)$. First, it must be normalized:

$\int_0^\infty p(h) \, dh = 1$.

Otherwise, it wouldn’t be a proper probability distribution.